The systems, announced at Computex in Taipei, will power what the company calls ‘AI factories’.

Nvidia has unveiled new Blackwell-powered systems that, it said, will allow enterprises to “build AI factories and data centers to drive the next wave of generative AI breakthroughs.” The systems, announced at the Computex trade show in Taipei, come with single or multiple GPUs, x86 or Grace-based processors, and air or liquid cooling to accommodate application requirements.

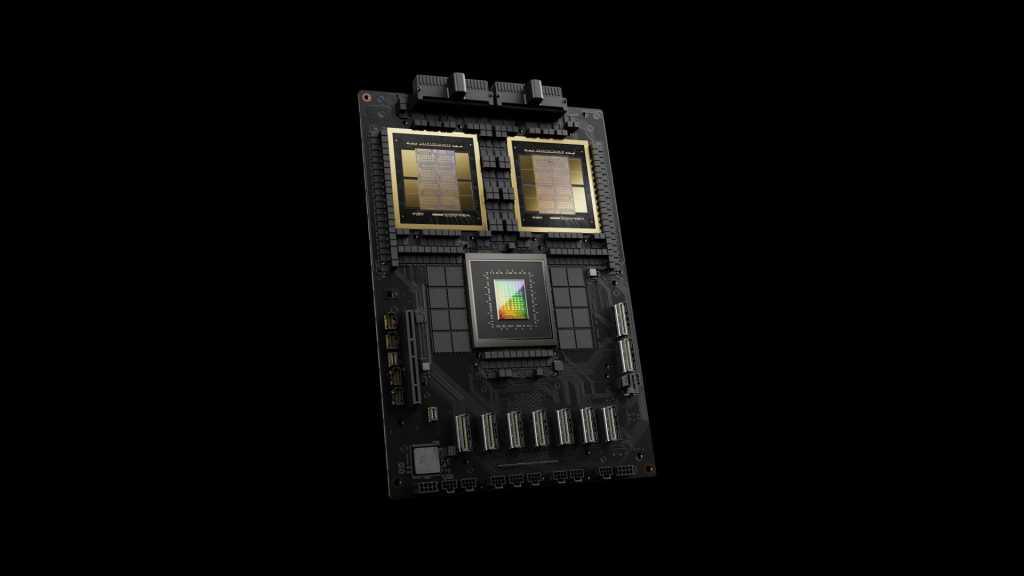

Nvidia also said that its MGX modular reference design platform, introduced at Computex last year, now supports Blackwell products. During a media briefing ahead of the show, it said that the new Nvidia GB200 NVL2 platform, a smaller version of the GB200 NVL72 introduced in March, will speed up data processing by up to 18x, with 8x better energy efficiency, compared with using x86 CPUs, thanks to the Blackwell architecture.

Blackwell, announced at Nvidia’s GTC event in March, is the successor to Nvidia’s Hopper GPU architecture, while Grace is its Arm-based CPU design. The GB200 systems contain Blackwell GPUs and Grace CPUs.

“The GB200 NVL2 platform brings the era of GenAI to every datacenter,” said Dion Harris, Nvidia director of accelerated computing, during the briefing.

The company said that ten more partners now offer systems featuring the Blackwell architecture. ASRock Rack, Asus, Gigabyte, Ingrasys, Inventec, Pegatron, QCT, Supermicro, Wistron and Wiwynn will deliver cloud, on-premises, embedded, and edge AI systems using its GPUs and networking.

“Nvidia has come to dominate and shape the foundational architecture and infrastructure required for powering innovations in artificial intelligence,” said Thomas Randall, director of AI market research at Info-Tech Research Group, in an email. “Today’s announcements reinforce that claim, with Nvidia technology to be embedded at the heart of computer manufacturing, AI factories, and making generative AI broadly accessible to developers.”

Performance anxiety

This performance, however, will bring new worries for data center managers, said Alvin Nguyen, senior analyst at Forrester.

“I expect that Blackwell powered systems will be the preferred AI accelerator, just like the previous Nvidia accelerator,” Nguyen said in an email. “The higher power variants will require liquid cooling and that will force the changes in the data center market: Not all data centers can be updated to supply the power and water needed to support large amounts of these systems. This will drive spending on data center upgrades, new data centers, the use of partners (colocation, cloud services), and the adoption of less power-hungry accelerators from competitors.”

On the networking front, Nvidia announced that Spectrum-X, an accelerated networking platform designed for Ethernet-based AI clouds that was unveiled at Computex last year, is now generally available. Furthermore, Amit Katz, VP of networking, said that Nvidia is accelerating the update cadence: new products will be released annually to provide increased bandwidth and ports, as well as enhanced software feature sets and programmability.

The platform architecture, with its DPUs (data processing units) optimized for north-south traffic and its SuperNIC optimized for east-west, GPU-to-GPU traffic, makes sense, Forrester’s Nguyen noted, but it does add complexity. “This is a complementary solution that will help drive the adoption of larger AI solutions like SuperPods and AI factories,” he said. “There is additional complexity using this, but it will ultimately help Nvidia customers who are implementing large scale AI infrastructure.”

But, he added, “Nvidia is pushing AI infrastructure with another technology standard that will end up being proprietary, but they need to do this to keep ahead of the competition.”
